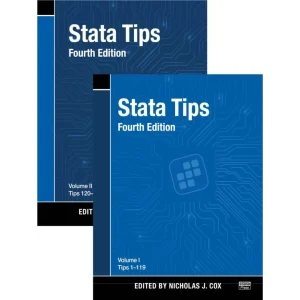

The Elements of Statistical Learning: Data Mining, Inference, and Prediction, Second Edition (Springer Series in Statistics)

Original price: $103,99. Current price: $19,99.

- 100% Satisfaction Guaranteed!

- Immediate Digital Delivery

- Download Risk-Free

✔️ Digital file type(s): 1 PDF

This book describes the important ideas in a variety of fields such as medicine, biology, finance, and marketing in a common conceptual framework. While the approach is statistical, the emphasis is on concepts rather than mathematics. Many examples are given, with a liberal use of colour graphics. It is a valuable resource for statisticians and anyone interested in data mining in science or industry. The book’s coverage is broad, from supervised learning (prediction) to unsupervised learning. The many topics include neural networks, support vector machines, classification trees and boosting—the first comprehensive treatment of this topic in any book.

This major new edition features many topics not covered in the original, including graphical models, random forests, ensemble methods, least angle regression & path algorithms for the lasso, non-negative matrix factorisation, and spectral clustering. There is also a chapter on methods for “wide” data (p bigger than n), including multiple testing and false discovery rates.

50 reviews for The Elements of Statistical Learning: Data Mining, Inference, and Prediction, Second Edition (Springer Series in Statistics)


S. Matthews –

This is a quite interesting and extremely useful book, but it is wearing to read in large chunks. The problem, if you want to call it that, is that it is essentially a 700-page catalogue of clever hacks in statistical learning. From a technical point of view it is well-enough structured, but there is not the slightest trace of an overarching philosophy. And if you don’t already have a philosophical perspective in place before you start, the read you face might well be an even harder grind. Be warned.

Some of the reviews here complain that there is too much math. I don’t think that is an issue. If you have decent intuitions in geometry, linear algebra, probability and information theory, then you should be able to cruise through and/or browse in a fairly relaxed way. If you don’t have those intuitions, then you are attempting to read the wrong book.

There were a couple of things that I expected (things I happen to know a bit about), but that were missing. On the unsupervised learning side, the discussion of Gaussian mixture clustering was, I thought, a bit short and superficial, and did not bring out the combination of theoretical and practical power that the method offers. On the supervised learning side, I was surprised that a book that dedicates so much time to linear regression finds no room for a discussion of Gaussian process regression as far as I could see (the nearest point of approach is the use of Gaussian radial basis functions [oops: having written that, I immediately came across a brief discussion (S5.8.1) of, essentially, GP regression – though with no reference to standard literature]).

Craig Garvin –

This is one of the best books in a difficult field to survey and summarize. Like ‘Pattern Recognition’, ‘Statistical Learning’ is an umbrella term for a broad range of techniques of varying complexity, rigor and acceptance by practitioners in the field. The audience for such a text ranges from the user requiring a code library to the mathematician seeking proof of every statement. I sit somewhere in the middle, but more towards the mathematical end. I subscribe to the traditional statistician’s view of Machine Learning. It is a term invented in order to avoid having to prove theorems and dodge the rigors of ‘real’ statistics. However, I strongly support such a course of action. There is an immense need for Machine Learning algorithms, whether they have actual properties or not, and an equal need for books to introduce these topics to people like myself who have a strong mathematical background, but have not been exposed to these techniques.

Hastie & Tibshirani has the most Post-its of any book on my shelf. When my company built a custom multivariate statistical library for our targeted product, we largely followed Hastie & Tibshirani’s taxonomy. Their overview of support vector machines is excellent, and I found little of value to me in dedicated volumes like Cristianini & Shawe-Taylor that wasn’t covered in Hastie & Tibshirani. Hastie & Tibshirani is another book with excellent visual aids. In addition to some great 2-D representations of complex multidimensional spaces, I thought the ‘car going up hill’ icon was a very useful cue that the level was going up a notch.

Having praised this book, I can’t argue with any of the negative reviews. There is no right answer as to where to start or what to cover. This book will be too mathematical for some, insufficiently rigorous for others, but was just right for me. It will offer too much of a hodge-podge of techniques, miss someone’s favorite, or offer just the right balance. In the end, it was the best one for me, so if you’re like me (someone with a very solid math base, not a mathematician, who appreciates rigor but isn’t married to it, and who is looking to self-start on this topic), you’ll like it.

Zhan Shi –

This edition adds some essential features for supervised statistical learning, such as supervised principal components, which I find fairly useful.

Governor –

I originally bought this book for coverage of some topics about which I know nothing. But having had it for just a week, I have been more impressed with what I have learned about stuff that I thought I knew. The material in Chapter 7 is one of several cases in point. The discussion of cross-validation is great, and the way it is related to other aspects of the model selection problem is masterly. More generally, the book manages a nice balance between theory and application, and its scope is truly impressive. Thanks to the authors and publisher.

GM –

The book is excellent if you want to use it as a reference and study machine learning by yourself. It’s quite comprehensive and deep in the areas in which the authors are most familiar and famous (the frequentist approach, ensemble techniques, maximum likelihood and its variations, the lasso). I would recommend Bishop’s machine learning book as an alternative if you want to gain a deeper understanding of Bayesian techniques; that one is more readable as well. Hastie et al.’s book is just OK from a didactic point of view. The real-world examples are complicated to follow (I would prefer simpler synthetic data sets). Some descriptions and explanations are too terse, a price to pay for comprehensiveness in a small volume. Overall, a great effort and a useful contribution. You’ll most likely need to check out other sources to gain a deeper understanding of some of the topics discussed. See the MIT machine learning lecture notes (available online).

Dr. Houston H. Stokes –

“The Elements of Statistical Learning: Data Mining, Inference and Prediction,” 2nd edition by Trevor Hastie, Robert Tibshirani and Jerome Friedman is the classic reference for the recent developments in machine learning statistical methods that have been developed at Stanford and other leading-edge universities. Their book covers a broad range of topics and is filled with applications. Much new material has been added since the first edition was published in 2001. Since most of these procedures have been implemented in the open-source program R, this book provides a basic and needed reference for their application. Important estimation procedures discussed include MARS, GAM, Projection Pursuit, Exploratory Projection Pursuit, Random Forest, General Linear Models, Ridge Models and Lasso Models, etc. There is a discussion of bagging and boosting and how these techniques can be used. There is an extensive index, and many of the datasets discussed are available from the web page of the book or from other sources on the web. Each chapter has a number of problems that test mastery of the material. I have used material from this book in a number of graduate classes at the University of Illinois at Chicago and have implemented a number of the techniques in my software system B34S. While the 1969 book by Box and Jenkins set the stage for time series analysis using ARIMA and Transfer Function Models, Hastie, Tibshirani and Friedman have produced the classic reference for a wide range of new and important techniques in the area of Machine Learning. For anyone interested in Data Mining this is a must-own book.

M. D. HEALY –

This should certainly not be the first statistics book you read, or even the second or third book, but when you are ready for it then you should absolutely read it. But be prepared to read it very slowly and digest each page. Its greatest strength is that it shows how much of modern statistics comes down to a few fundamental issues: bias, variance, model complexity, and the curse of dimensionality. There is no free lunch in statistics: methods that claim to avoid these tradeoffs only do so by adding more assumptions about the structure of your data. If your data match the assumptions of such methods, you gain statistical power, but if your data don’t match the assumptions, then you lose.

By looking closely at the assumptions, the book shows how many contemporary methods that look different are fundamentally similar under the hood.

And in my own work I have adopted their use of open circles for the points in scatterplots. These circles are easier to see than tiny solid dots, but overlapping symbols don’t cover each other the way large filled symbols do.

AJ –

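For the past ten years or so, regularized estimation such as the Lasso has been extremely popular in the field of statistical learning theory. It has become very important for the new types of data analysis that have accompanied recent advances in computing technology, and I study and research it myself.

The three authors of this book, Hastie, Tibshirani, and Friedman, are at the frontier of this field, and the book covers statistical learning theory broadly, which makes it very useful.

Admittedly, because it covers such a wide range, each individual topic ends up being treated somewhat shallowly. But covering the entire field while also including detailed theoretical development is obviously impossible in the first place. Besides, anyone who can read this book should also be able to read the original papers, and if you want the deep theory, there is nothing better than reading the papers.

So, as for how to use this book, I recommend using it to "get a grasp of the topics and their outlines." And I hope that readers who become interested in this book will use it as a starting point for reading the original papers.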

andreas –

This book is one of the classics when it comes to the field of statistics and data mining. It provides a good mix of theory and practice in a concise manner – for statisticians and mathematicians at least.

The clear teaching will help you understand the concepts behind a huge variety of methods. To dig deeper, you will probably need to consult a more specialized source for the particular method of interest.

Take a look at the table of contents for an overview.

The color print makes the book very visually appealing.

Note that the book can now just be downloaded!

[…]

Lep –

This is a great book for someone who already has some background in statistics, but also for the complete novice in learning theory.

D. Morrison –

Especially interesting are the chapters covering biotech. Familiarity with statistics (101/Introductory Level) is assumed. It’s a great intermediate-level survey for an undergrad, or anyone with a new interest in the area.

“Learning” in this book’s context is really about the collection of statistics, which is akin to mentioning the “AI” buzzword. The bottom line is that a human must design, and at least post-process/post-supervise, the collection of the statistics for real work to get done; so don’t think that after reading this book you’ll be designing super robots that have a soul. 😉

I thoroughly enjoyed the book, and I probably would even more if I had a Masters in Statistics.

Matt Grosso –

This review is written from the perspective of a programmer who has sometimes had the chance to choose, hire, and work with algorithms and the mathematicians/statisticians who love them in order to get things done for startup companies. I don’t know if this review will be as helpful to professional mathematicians, statisticians, or computer scientists.

The good news is, this is pretty much the most important book you are going to read in the space. It will tie everything together for you in a way that I haven’t seen any other book attempt. The bad news is you’re going to have to work for it. If you just need to use a tool for a single task this book won’t be worth it; think of it as a way to train yourself in the fundamentals of the space, but don’t expect a recipe book. Get something in the “using R” series for that.

When it came out in 2001, my sense of machine learning was of a jumbled set of recipes that tended to work in some cases. This book showed me how the statistical concepts of bias, variance, smoothing and complexity cut across both traditional statistics and inference and the machine learning algorithms made possible by cheaper CPUs. Chapters 2-5 are worth the price of the book by themselves for their overview of learning, linear methods, and how those methods can be adapted to non-linear basis functions.

The hard parts:

First, don’t bother reading this book if you aren’t willing to learn at least the basics of linear algebra first. Skim the second and third chapters to get a sense of how rusty your linear algebra is, and then come back when you’re ready.

Second, you really, really want to use the SQRRR technique with this book. Having that glimpse of where you are going really helps guide your understanding when you dig in for real.

Third, I wish I had known of R when I first read this; I recommend using it along with some sample data sets to follow along with the text, so the concepts become skills and not just abstract relationships to forget. It would probably be worth the extra time, and I wish I had known to do that then.

Fourth, if you are reading this on your own time while making a living, don’t expect to finish the book in a month or two.

VD –

Here are my impressions about the book.

* The organization of the book is clear and concise. No overly lengthy or fuzzy discussions. Explanations of methods and techniques are clear, though sometimes a bit hard to follow.

* Liberal use of color. It helps make the drawings more attractive and show more information. It may be too bright for some.

* Some parts, like SVM, LDA, and PCA, seem to be explained in quite a difficult way, though they are clear enough and self-sufficient.

* There are many references and pointers to original works and related fields. Big plus here.

* Work and results on real sample data. I particularly enjoy it! It helps to better understand each method and its +/-

This is a good book for those who use statistics for data mining. There seems to be more emphasis on the probabilistic (frequentist) side. The reader is expected to have a good enough level in maths to grasp the concepts quickly, so it may not be appropriate for beginners in the field.

Futures Guy –

In 2009, the second edition of the book added new chapters on random forests, ensemble learning, undirected graphical models, and high-dimensional problems. And now, thanks to an agreement between the authors and the publisher, a PDF version of the 2nd edition is available for free download. […]

Cannon Gray LLC –

Anyone working in data mining and predictive analytics or in applied statistics in other areas should have this on their shelf. It is well written and very comprehensive. Not light reading but not a pure math stat book either. It helped bring me up to date on many of the recent developments in machine learning and applied statistics. An excellent reference.

Kaushik Ghose –

I would recommend this book to those who need to use machine learning. It is great as a reference book. Its compact style means that it will most benefit those with a background in linear algebra (matrices) and some calculus. It may not be the best book to start learning these techniques from scratch.

A curious reader –

The book is not for everyone, as can be seen from other reviews. There are other books out there; I guess it’s all about the right fit. I found this book most useful in my case. My background: MA in math and PhD in economics. I was looking for a book that would give me a good overview of modern machine learning methods. What I like most about this book is that the authors try to give a uniform perspective on the covered methods. As a result, I’ve also gained a better understanding of what I learned in my statistics and metrics classes back in school.

Silvia Lauble –

This is a great book for those who want to understand the concepts behind the main statistical learning techniques at the time.

The second edition does add important chapters on topics that have grown since the first edition was done.

abg –

I can’t really rate it; it is simply irreplaceable.

Sergey –

I’m an experienced data miner, and this book is really helpful. It might seem tough for newbies, though. But it is very useful if you are trying to figure out the pros and cons of different methods.

ram –

This book is used by many machine learning courses. It is used in the Stanford grad program, which should give everyone a sense of the authors’ target audience. Do not expect to sit down and just read it like a novel for a quick overview of statistical learning methods. Warning: expect some heavy-duty math. In the interest of full disclosure I’ll repeat: expect mega-math. The authors claim you can read the book and avoid what they term “technically challenging” sections, but I’m not really sure how one would do that. The book presents just about every important ML technique, from decision trees to neural nets and boosting to ensemble methods. The Bayesian neural nets are tons of fun.

You can download a pdf copy from the authors website to take a look at it, but a serious student in the subject really should get hardcopy. […]

Seth M. Spain –

This is an excellent book, but it is probably most accessible to readers who already have a pretty solid grounding in statistics or applied mathematics. I have used it as background material for courses in advanced data analysis and computational statistics for PhD students in management, but I would generally not use it as a textbook for these students, because their mathematical preparation is typically too patchy. I do use it as a text for research assistants who work with me closely and develop a level of mathematical maturity.

That being said, this is a very comprehensive overview of the field, and is incredibly useful as a reference for statisticians and other professionals.

John Phillip Hilbert –

As many have pointed out, this is no beginner’s guide to data mining techniques. Rather, it is like a graduate textbook. It covers most techniques with enough rigor to recreate the algorithms, modify them intelligently, or simply cite them in statistical detail. I think there are enough beginner guides out there, even on the internet, and I personally needed a more detailed reference (think Hamilton’s ‘Time Series Analysis’ or Jackson’s ‘Classical Electrodynamics’).

I cannot comment about any of the alternatives, however I am quite pleased with this book. I especially like how refined the layout is. The book is full of blue chapter and section headings in addition to many intelligently colored plots. Because it is more of a reference, it can be quite dry (I wouldn’t want it any other way), however the layout makes it a bit enjoyable. If you are looking for a good reference on this subject, just buy it and don’t look back (or fret over the cost).

Nicolas Jimenez –

This is a graduate-level overview of key statistical learning techniques. I bought it as I am currently a student in Hastie’s graduate-level stats course at Stanford. The book is fairly deep given its breadth, which is why it’s so long. It’s a very good book and an amazing reference, but I do not recommend it unless you have strong mathematical maturity. If you do not know linear algebra / probability well, you will be lost. If you’re like me, you’ll also have to do some googling around for some of the stats stuff he talks about (confidence intervals, the chi-squared distribution…). I personally don’t mind this. The coverage of this book is truly amazing. Trevor talks about everything from the lasso and ridge regression to k-NN, SVMs, ensemble methods, and random forests. It’s fun to just flip to a random page and start reading. If you want to be an expert in this area, you need to have this book. On the other hand, if you just want to know basic techniques and then apply them, this book might be overkill and you may be better served by a more elementary text.

SH –

I bought the book as a reference for my team members.

They find the book very useful, as they keep borrowing it.

hape –

The Hastie is a solid textbook. It is very readable and pleasantly designed. In particular, the format and typography are beautiful LaTeX typesetting without too much interference from the publisher, which makes the book a feast for the eyes.

I bought the (frequentist) Hastie as a complement to the Bayesian Bishop and mostly use it as just that, a complement. Hastie takes the conservative, frequentist approach and is therefore closer to “classical” canonical statistics.

Unfortunately, I often find that the frequentist approaches are not motivated at key points in Hastie. This makes it considerably harder to follow. A thorough understanding tends to come only at the end of a section, when you see where the barely explained approach has taken you. On the other hand, the results are often nicely explained and elucidated; it is just that the motivation at the start of sections is often missing.

In some places Hastie is much easier to understand than Bishop, which is also due to the frequentist approach. Where Bishop considers distributions, the models in Hastie are often somewhat simpler and therefore much easier to work through.

Of course, Hastie also contains many algorithms that Bishop does not discuss, and vice versa.

Conclusion: the Hastie is a good introduction.

Jona –

This book is beautiful. It is definitely not an introductory book, but the effort is worth it. It should probably be read along with an open Wikipedia tab, for all the non-statistics wizards out there. In summary, this book takes effort to read, but it is a priceless jewel of knowledge in its field.

Thanh Nguyen –

The book is in a very bad condition.

Yu Feng –

A must read book for machine learning

Billy –

As described.

Matt Imig –

Fantastic!

Eiran –

best basic book there is

Sherry –

Good book; it helped me build a systematic knowledge of statistics and machine learning.

Of course, you may need some basic knowledge before reading it if you are a novice.

Caihao Cui –

This book reviews many machine learning and data mining techniques. I am very happy to have this book; it has helped me a lot in my academic research.

andrew beaven –

excellent

GEORGE R. FISHER –

THE definitive source. Subsequent to getting this book I had the opportunity to take STATS315B with Jerome Friedman, one of the authors and a giant in the field, and I found it to be an absolute revelation.

Josh Weinflash –

Thumbs up

Amanda –

Good book. Download it. Don’t pay for it.

Yun-chiang Tai –

It is a book of the perfect combination of statistical thinking with machine learning. Therefore, it is called statistical learning.

Roberto Parra –

Beautiful book!

R. Ty. –

A very well-written, fairly comprehensive book. It may prove a bit difficult to follow for those without a background in statistics, engineering, or advanced mathematics.

Jieralice –

Good quality, color print

Bleep Bloop –

The book is great, excellent formal introduction to the methods. The graphics are also really excellent and helpful, and nicely colored.

My only complaint, because the book content really is 5-star quality, is that it was poorly bound. 2 pages have already fallen out; 1 more and I’m sending the book back. I love Springer series texts, and I’ve never had this issue before.

Matteo Fontana –

This volume is essential for anyone who wants to deepen their (theoretical…) foundations in statistical learning.

Written by the titans of the field, it is an all-encompassing book that starts from the basics (the first chapters describe the basic regression and classification techniques, probably to introduce them as tools for the treatment that follows) and goes on to describe much more complex and advanced concepts, such as the various regularization techniques (Ridge, LASSO), the Benjamini-Hochberg method, SVMs, etc.

D Marx –

This book is basically the bible for statistical learning. I don’t know what I would do without it.

Keith Lancaster –

I already had the PDF of this book and was impressed, so I purchased the hard copy. Fantastic content, but as a book lover, I have to say the quality of the printed version is outstanding.

Tony Hong –

I have finished reading the book, but I feel somewhat confused…

Although I think I did pretty well with math in graduate school (Physics PhD from a good school), I find it hard to follow some of the derivations. The book specifies a prerequisite level of one elementary statistics course and some linear algebra, but I feel the actual math requirement is much higher, especially in multivariate statistics and matrix representations. I often find myself staring at the formulas, trying to get the big picture.

This book is particularly good at conveying the insights and intuitions underlying the different methods. It covers a vast range of techniques and tries to present the ideas and intuitions behind why a method works or how it is an implementation of a simpler idea, e.g. the various obvious or hidden implementations of lasso regularization. This is impressive. No one could do better, because the authors are leading researchers who either invented these ideas or made significant contributions to their development.

Unfortunately, this book is not a textbook for outsiders to machine learning research. The actual implementations/algorithms are not covered in detail, are often provided as-is, and have weak links to the presented formulas. The indices or notation in the formulas are sometimes not explained, and some of the key concepts needed to understand the principles are not introduced. I presume researchers in this field share a common understanding of what they are, but for someone like me, it is sometimes very difficult to follow.

Anyway, it is great to have finished this book. I don’t know how much I got from it, but at least I have some feeling for how deep and how broad this field is, and I know where to look if I encounter problems later. I would recommend that outside learners not use this book as their principal textbook and use it only as a reference. On the other hand, the book An Introduction to Statistical Learning by the same authors is highly recommended for newcomers.

Guillaume Légaré –

Great value and price for this book. Only caveat is that it came in with rough corners, and had a kind of dirty (sticky?) back cover. The mailing process does not seem to be responsible for this, as the book was well packed. It does not affect the actual content of the book, all pages are in great condition. Thank you!

Jonathan –

Formally not always exact or complete, but a very good overview and introduction. Also, the figures are very well made. Recommendable but not perfect.

Niccolo’ B. –

An excellent text that contains almost all the fundamental topics of machine learning, explained very clearly and at the same time in reasonable detail.